Besides the atoms that make up our bodies and all of the objects we encounter in everyday life, the universe also contains mysterious dark matter and dark energy. The latter, which causes galaxies to accelerate away from one another, constitutes the majority of the universe’s energy and mass.

Ever since dark energy was discovered in 1998, scientists have been proposing theories to explain it—one is that dark energy produces a force that can be measured only where space has a very low density, like the regions between galaxies.

Paul Hamilton, a Univ. of California, Los Angeles (UCLA) assistant professor of physics and astronomy, reproduced the low-density conditions of space to precisely measure this force. His findings, which helped to reveal how strongly dark energy interacts with normal matter, appear online in Science.

Hamilton’s research focuses on the search for specific types of dark energy fields known as “chameleon fields,” which exhibit a force whose strength depends on the density of their surrounding environment. This force, if it were proven to exist, would be an example of a so-called “fifth force” beyond the four known forces of gravity, electromagnetism, and the strong and weak forces acting within atoms.

But this fifth force has never been detected in laboratory experiments, which prompted physicists to propose that when chameleon fields are in dense regions of space—for example, the Earth’s atmosphere—they shrink so dramatically that they become immeasurable.

Chameleon fields were first hypothesized in 2004 by Justin Khoury, a Univ. of Pennsylvania physicist and co-author of the Science paper, but it wasn’t until 2014 that English physicist Clare Burrage and colleagues proposed a methodology for testing their existence in a laboratory using atoms.

At the time, Hamilton was a postdoctoral researcher in the UC Berkeley laboratory of Holger Müller, whose team already had a head start on investigating chameleon fields: it had independently developed an experiment that uses atoms to measure small forces.

Detecting the force of chameleon fields requires replicating the vacuum of space, Hamilton explained, because when they are near mass, the fields essentially hide. So the physicists built a vacuum chamber, roughly the size of a soccer ball, in which the pressure was one-trillionth that of the atmosphere we normally breathe. The researchers inserted atoms of cesium, a soft metal, into the vacuum chamber to detect forces.
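
To put "one-trillionth of atmospheric pressure" in perspective, the ideal-gas relation n = P/(k_B T) converts a pressure into a number of gas molecules per unit volume. The sketch below assumes a standard sea-level atmosphere of 101,325 Pa and a room-temperature chamber (295 K); neither value is given in the article.

```python
BOLTZMANN = 1.380649e-23   # J/K (exact SI value)
ATMOSPHERE_PA = 101_325.0  # standard sea-level pressure, Pa

def number_density(pressure_pa: float, temperature_k: float) -> float:
    """Ideal-gas number density n = P / (k_B T), in molecules per cubic metre."""
    return pressure_pa / (BOLTZMANN * temperature_k)

# Assumption: the residual gas is at room temperature, 295 K.
chamber_pressure = ATMOSPHERE_PA * 1e-12   # one-trillionth of an atmosphere
n_chamber = number_density(chamber_pressure, 295.0)
n_air = number_density(ATMOSPHERE_PA, 295.0)

# Divide by 1e6 to convert from per cubic metre to per cubic centimetre.
print(f"chamber: {n_chamber / 1e6:.1e} molecules per cm^3")
print(f"air:     {n_air / 1e6:.1e} molecules per cm^3")
```

Under these assumptions the chamber still holds roughly 25 million molecules per cubic centimetre, compared with about 2.5 × 10^19 in room air.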

“Atoms are the perfect test particles; they don’t weigh very much and they’re very small,” Hamilton said.

They also added to the vacuum chamber an aluminum sphere roughly the size of a marble, which functioned as a dense object to suppress the chameleon fields and allow the researchers to measure small forces. The atoms were then cooled to within 10 one-millionths of a degree above absolute zero, in order to keep them still enough for the scientists to perform the experiment.
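
A quick estimate shows why the cooling matters. For atoms of mass m at temperature T, the spread of velocities along one axis is about σ_v = √(k_B T / m). The sketch assumes a cesium-133 atomic mass of 132.905 u and compares the article's 10-microkelvin figure with an assumed room temperature of 295 K.

```python
import math

BOLTZMANN = 1.380649e-23             # J/K
ATOMIC_MASS_UNIT = 1.66053907e-27    # kg
CESIUM_MASS = 132.905 * ATOMIC_MASS_UNIT  # kg, cesium-133 (assumed isotope)

def thermal_velocity_spread(temperature_k: float, mass_kg: float) -> float:
    """One-dimensional RMS velocity spread sqrt(k_B T / m) of an ideal gas, in m/s."""
    return math.sqrt(BOLTZMANN * temperature_k / mass_kg)

cold = thermal_velocity_spread(10e-6, CESIUM_MASS)  # 10 millionths of a kelvin
warm = thermal_velocity_spread(295.0, CESIUM_MASS)  # assumed room temperature

print(f"10 microkelvin: {cold * 100:.1f} cm/s")
print(f"295 K:          {warm:.0f} m/s")
```

At 10 microkelvin the atoms drift at a few centimetres per second rather than the more than one hundred metres per second they would have at room temperature.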

Hamilton and his team collected data by shining a near-infrared laser into the vacuum chamber and measuring how the cesium atoms accelerated due to gravity and, potentially, another force.

“We used a light wave as a ruler to measure the acceleration of atoms,” Hamilton said.

This measurement was performed twice: once with the aluminum sphere close to the atoms and once with it farther away. If chameleon fields exist, theory predicts that the atoms would accelerate differently depending on how far away the sphere is.
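
The near/far comparison is a differential measurement: effects common to both sphere positions, such as Earth's gravity, cancel in the difference, and a chameleon force would appear as a nonzero residual. The sketch below checks whether two acceleration readings differ by more than their combined uncertainty. It assumes independent Gaussian errors and a hypothetical 2-sigma threshold; these are illustrative choices, not the analysis used in the Science paper.

```python
import math
from typing import NamedTuple

class Comparison(NamedTuple):
    difference: float    # a_near - a_far, in the same units as the inputs
    uncertainty: float   # combined one-sigma uncertainty of the difference
    significance: float  # |difference| / uncertainty

def compare_accelerations(a_near: float, sigma_near: float,
                          a_far: float, sigma_far: float) -> Comparison:
    """Difference of two independent measurements, errors added in quadrature."""
    if sigma_near <= 0 or sigma_far <= 0:
        raise ValueError("uncertainties must be positive")
    diff = a_near - a_far
    sigma = math.hypot(sigma_near, sigma_far)
    return Comparison(diff, sigma, abs(diff) / sigma)

def is_significant(result: Comparison, threshold: float = 2.0) -> bool:
    """Hypothetical decision rule: report a force only above `threshold` sigma."""
    return result.significance > threshold
```

A null result corresponds to `is_significant` returning `False`; the size of `uncertainty` then sets how large any sphere-dependent force could be while still going undetected.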

The researchers found no difference in the acceleration of the cesium atoms when they changed the location of the aluminum sphere. That null result narrows how strongly chameleon fields can interact with normal matter. Hamilton will continue to use cold atoms to investigate theories of dark energy; his next experiment will aim to detect other possible forms of dark energy that produce forces that change with time.

Source: Univ. of California, Los Angeles

Monday, August 24, 2015

Carbon number crunching

A booming economy and population led China to emerge in 2006 as the global leader in fossil-fuel carbon emissions, a distinction it still maintains. But exactly how much carbon China releases has been a topic of debate, with recent estimates varying by as much as 15%.

“There’s great scrutiny of Chinese emissions now that they are the world’s largest fossil-fuel emitter,” said Tom Boden from the U.S. Dept. of Energy (DOE)’s Oak Ridge National Laboratory (ORNL). “Their economy has grown at such a fast rate, so naturally a lot of attention has turned to their emission estimates.”

As director of the Carbon Dioxide Information Analysis Center (CDIAC) at ORNL, Boden has been tracking the world’s carbon emissions for the past 25 years. CDIAC’s annual inventory of carbon released from fossil fuels by country has become a benchmark dataset for climate change scientists and policymakers.

Boden, ORNL’s Bob Andres, and Appalachian State Univ.’s Gregg Marland, a former ORNL scientist, recently lent their emissions data expertise to a new Nature study that reevaluated China’s emissions from 2000 to 2013. The study, led by Zhu Liu of Harvard Univ., illustrates how carbon emissions estimates take into account a complex set of variables.

“The fundamental calculation requires knowledge of the amount of fuel consumed and the efficiency of burning or the oxidation rate, but you also have to know the carbon content of the fuel,” Boden said. “The carbon content of coal is different than natural gas or crude oil, and it also differs based on where it comes from. Coal coming out of China has a different carbon composition than West Virginia coal.”
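
Boden's description amounts to a product of three factors: the mass of fuel burned, the fraction of that mass that is carbon, and the fraction of that carbon that is actually oxidized. The sketch below applies the product per fuel record. The fuel names and coefficients in the example lines are hypothetical placeholders, not CDIAC or Chinese inventory values.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class FuelRecord:
    fuel: str
    mass_consumed_t: float    # tonnes of fuel burned
    carbon_fraction: float    # tonnes of carbon per tonne of fuel
    oxidized_fraction: float  # share of that carbon actually oxidized

def carbon_emitted(record: FuelRecord) -> float:
    """Tonnes of carbon released: fuel mass x carbon content x oxidation rate."""
    for share in (record.carbon_fraction, record.oxidized_fraction):
        if not 0.0 <= share <= 1.0:
            raise ValueError("fractions must lie between 0 and 1")
    return record.mass_consumed_t * record.carbon_fraction * record.oxidized_fraction

def total_carbon(records: list[FuelRecord]) -> float:
    """Sum carbon emissions over all fuel records."""
    return sum(carbon_emitted(r) for r in records)

# Hypothetical example: identical coal use, two different carbon coefficients.
coal_a = FuelRecord("coal", 1_000.0, 0.70, 0.98)
coal_b = FuelRecord("coal", 1_000.0, 0.60, 0.98)
print(carbon_emitted(coal_a), carbon_emitted(coal_b))
```

Because the result scales linearly with `carbon_fraction`, revising a fuel's carbon coefficient by some percentage revises that fuel's estimated emissions by the same percentage, which is why coefficients representative of the coal actually burned matter.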

In this new study, the researchers used new provincial-level fossil fuel data and different carbon coefficients that are more representative of Chinese coal. China is also the world’s largest cement producer, and the new study reevaluated China’s releases of carbon dioxide from cement production as well. The team’s revised estimate found that China’s fossil-fuel and cement emissions are approximately 10 to 14% lower than other published inventories including CDIAC’s published estimates. Boden, Andres and Marland helped verify the team’s calculations based on their years of carbon accounting experience.

Although the corrected estimate does not change China’s status as the world’s largest emitter, it will benefit efforts to project future climates.

“Differences on an order of 15% become important at global and regional carbon cycle scales,” Boden said. “For a country that’s emitting something on the order of two gigatons of carbon per year and with growing energy demands and large reserves of coal, it’s important to quantify China’s fossil-fuel emissions well.”
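
Boden's round numbers make the stakes easy to quantify. The sketch below takes his order-of-magnitude figures (about two gigatons of carbon per year and a 15% discrepancy) and converts the gap into carbon and, using the molar-mass ratio 44/12, into carbon dioxide. The inputs are his approximations, not inventory values.

```python
CO2_PER_C = 44.0 / 12.0  # molar mass of CO2 divided by molar mass of carbon

def discrepancy(total_gt_carbon: float, fraction: float) -> tuple[float, float]:
    """Return a fractional discrepancy as (Gt carbon, Gt CO2)."""
    gap_c = total_gt_carbon * fraction
    return gap_c, gap_c * CO2_PER_C

gap_c, gap_co2 = discrepancy(2.0, 0.15)
print(f"{gap_c:.2f} Gt C per year, or about {gap_co2:.1f} Gt CO2 per year")
```

A 15% difference on two gigatons is roughly 0.3 Gt of carbon, or about 1.1 Gt of CO2, every year.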

Source: Oak Ridge National Laboratory

Google Tests Six-Foot Humanoid Robot with Walk in Woods

Boston Dynamics, a robotics company owned by Google, posted footage of a prototype of its robot, named Atlas, taking a walk through the woods.

Atlas is designed “to negotiate outdoor, rough terrain in a bipedal manner, while being able to climb using hands and feet as a human would,” writes The Guardian.

Marc Raibert, the founder of Boston Dynamics, provided an update on Atlas’s development to an audience at the 11th annual Fab Lab Conference and Symposium in Boston earlier this month.

He explained that his team wanted to see how Atlas would perform in a wooded area because it is a far less predictable environment than the company’s laboratory.

Source: rdmag.com

X-ray duo’s research helps launch human trial for treatment of arsenic poisoning

Graham George and Ingrid Pickering, a husband and wife x-ray research team, have worked for decades to understand how contaminants in water and soil are taken up by the body and affect human health. Much of that research has taken place at the Stanford Synchrotron Radiation Lightsource (SSRL), a DOE Office of Science User Facility at SLAC National Accelerator Laboratory, where both are former staff scientists.

Now George and Pickering are co-leading a new study in Bangladesh that is testing whether giving people selenium supplements can protect them from arsenic poisoning caused by naturally contaminated drinking water, which affects more than 100 million people worldwide and can lead to cancer, liver disease and other severe health problems.

The clinical trial, which runs through July 2016, is paid for by the Canadian federal government and is sponsored by the Univ. of Saskatchewan, where Pickering and George have been professors since 2003.

Fighting poison with poison?

The idea for the treatment dates back to the 1930s, when a scientist discovered that rats fed wheat containing enough selenium to kill them could actually survive if they were also given arsenic-contaminated water.

Decades later, Jürgen Gailer, a scientist at the Univ. of Calgary in Canada who was conducting related research on this biological oddity, asked Pickering and George if they could use x-ray techniques to find out how combining these two toxins could seemingly cancel out their dangerous effects in mammals.

“We discovered the molecule responsible for this effect,” George said.

In a series of x-ray experiments that began over a decade ago at SSRL, George and Pickering identified a compound that forms in rabbits injected with both arsenic and selenium. When the rabbits excreted this compound, it apparently carried the toxins out of their bodies.

The study, published in 2000, also concluded that people exposed to arsenic-contaminated water might get some protection by increasing their selenium intake, and it offered possible clues to why arsenic poisoning can be carcinogenic.

In the current trial in Bangladesh, one group of men is receiving sodium selenite, a compound that could help cleanse arsenic from their systems, and another group is receiving a placebo. This trial controls how the supplements are administered more strictly than an earlier trial that was not successful.

Brain diseases, using plants for cleanup among latest projects

“Our focus has been on understanding the roles of metals in biology,” George said, as many proteins that carry out important functions in biology contain metallic elements. “We want to understand the detailed structure of metals in proteins’ active sites, and see how they’re localized in tissues.”

In addition to their ongoing arsenic and selenium work, the couple are also studying how plants might be used to remove soil contaminants that are potentially hazardous to human health.

“Wouldn’t it be great if we could plant a crop of plants that can harvest contaminants into the roots and take them away?” Pickering said.

Another thread of their research uses x-ray experiments to explore the roles that metal ions, in particular copper ions, play in diseases related to misfolded proteins, such as Alzheimer’s disease and prion diseases like mad cow disease.

George, who first visited SSRL in 1983, is a pioneer in biological studies using x-ray absorption spectroscopy to explore the atomic structure and arrangement of electrons in samples. This technique is also applicable to other fields, like chemistry and materials science. Pickering has focused her research on metals in the environment and how they impact biology and human health. She leads a synchrotron training program at her university for graduate students and postdoctoral researchers pursuing health-related research.

“We end up on each other’s papers a lot,” George said, because their research naturally overlaps.

A long history at SSRL

Both originally from the United Kingdom, the couple met in New Jersey while working as researchers at Exxon and have been married for 23 years. They worked as staff scientists at SLAC’s SSRL from 1992 to 2003, and still come to SLAC to conduct experiments at SSRL.

During their recent six-month research sabbatical at SSRL, they conducted several x-ray experiments related to their biological research.

“We’ve been doing something we haven’t done in a long time, which is work on an x-ray beamline together,” said George; work and family schedules have made it hard for them to share experimental time.

They also worked on software development to update EXAFSPAK, an x-ray data analysis tool that George created decades ago and that is in use at synchrotrons around the globe. George said his brother, Martin, and Allyson Aranda, both SSRL scientific programmers, are also working on this ongoing update, which will add new features and make the software easier to use.

Future x-ray work

Graham George said he is excited about the new research possibilities using x-ray lasers like SLAC’s Linac Coherent Light Source, which has x-ray pulses a billion times brighter than synchrotron light. “LCLS is going to change everything for many people in science. It makes possible the ‘wow’ kind of science,” he said, adding that synchrotrons like SSRL will continue to be the workhorses in x-ray research.

The couple said they will continue to be drawn to SSRL for their own work.

“It’s a ‘can-do’ place. That translates to a really great user experience,” Pickering said. “People here are really keen to make sure your experiment is successful. That really does count for a lot.”

George added, “It doesn’t just have to do with the quality of the x-ray beams. It also has to do with good ideas and a good fundamental approach, and the systematic, careful nature of the people who work here.”

Source: SLAC National Accelerator Laboratory

Paper-based Tests for Infectious Diseases

In Kimberley Hamad-Schifferli’s hand, the device looks like a white iPod Mini, small enough to fit in a pants pocket. However, this device is actually a paper-based, rapid diagnostic test capable of finding the presence of infectious diseases, including Ebola, dengue and yellow fever.

“(We) took advantage of the fact that nanoparticles have different colors if you make them different sizes,” said Hamad-Schifferli, of the Massachusetts Institute of Technology (MIT), who presented the device at the 250th National Meeting & Exposition of the American Chemical Society (ACS). “If you put nanoparticles on the antibodies that are inside the test, it gives rise to a different color on the test line.”

According to the Centers for Disease Control and Prevention, the 2014 Ebola outbreak in West Africa killed nearly 11,300 people in Guinea, Sierra Leone and Liberia. Total suspected cases numbered close to 28,000.

“Our main goal is to get this into the hands of as many people as possible,” Hamad-Schifferli said.

She emphasized that the test wasn’t designed to replace PCR (polymerase chain reaction) or ELISA (enzyme-linked immunosorbent assay) tests, which are more accurate but require laboratory settings. Rather, the new test is meant as a first screening tool for areas without running water or electricity.

According to ACS, PCR and ELISA “are bioassays that detect pathogens directly or indirectly, respectively.”

The test uses silver nanoparticles. Slight changes in size elicit different colors. Red was assigned to Ebola, green to dengue fever and orange to yellow fever. The colored nanoparticles are attached to antibodies that bind to a biomarker of interest. According to Hamad-Schifferli, the blood sample is introduced to a “cotton weave pad,” and wicks through the device as results develop. The results are read from a nitrocellulose membrane, the paper part of the device.
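
The color coding turns a single strip into a multiplexed test: each nanoparticle size, and therefore each line color, is tied to one pathogen's antibody. A minimal sketch of that lookup, using the color assignments from the article, is below. `interpret_strip` is a hypothetical helper for illustration only; it is not part of the team's device and is no substitute for a clinical reading.

```python
# Color-to-pathogen assignments as described in the article.
LINE_COLORS = {
    "red": "Ebola",
    "green": "dengue fever",
    "orange": "yellow fever",
}

def interpret_strip(visible_colors: list[str]) -> list[str]:
    """Hypothetical helper: map visible colored test lines to pathogens."""
    unknown = set(visible_colors).difference(LINE_COLORS)
    if unknown:
        raise ValueError(f"unrecognized line colors: {sorted(unknown)}")
    # Report each pathogen once, in a fixed order (red, green, orange).
    return [LINE_COLORS[c] for c in LINE_COLORS if c in visible_colors]
```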

She reported a sensitivity and specificity of 97% for the test. The test takes 10 min to complete.
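
Sensitivity and specificity measure different things, so a single "accuracy" figure can hide a trade-off. Sensitivity is the fraction of truly infected samples the test flags; specificity is the fraction of uninfected samples it correctly clears. The sketch below computes both from confusion-matrix counts; any counts fed to it here are hypothetical, not the team's data.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Confusion:
    true_pos: int   # infected, test positive
    false_neg: int  # infected, test negative (missed cases)
    true_neg: int   # uninfected, test negative
    false_pos: int  # uninfected, test positive (false alarms)

    def sensitivity(self) -> float:
        """TP / (TP + FN): share of infected samples that test positive."""
        return self.true_pos / (self.true_pos + self.false_neg)

    def specificity(self) -> float:
        """TN / (TN + FP): share of uninfected samples that test negative."""
        return self.true_neg / (self.true_neg + self.false_pos)

    def accuracy(self) -> float:
        """Overall share of correct results; depends on how common infection is."""
        total = self.true_pos + self.false_neg + self.true_neg + self.false_pos
        return (self.true_pos + self.true_neg) / total
```

Overall accuracy blends the two rates in proportion to how many infected and uninfected samples were tested, which is why the two rates are reported separately.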

At $5 per strip, the total test costs $20, but Hamad-Schifferli said mass production could reduce the cost. Currently, the research team is consulting with companies about making the product commercially available.

While the test performs well in the lab, the team, composed of researchers from MIT, Harvard Medical School and the U.S. Food and Drug Administration, found that humidity and temperatures of 36 °C caused problems. Sealed in foil packs, the test remained stable for three months under those conditions. Once opened, it must be used immediately, Hamad-Schifferli said.

Tests have been deployed to clinical settings in Colombia, Honduras and Africa. Hamad-Schifferli said it would be a few months before her team receives feedback regarding the test’s performance in the field.

Source: http://www.rdmag.com/articles/2015/08/paper-based-tests-infectious-diseases

Medical, biofuel advances possible with new gene regulation tool

Recent work by Los Alamos National Laboratory experimental and theoretical biologists describes a new method of controlling gene expression. The key is a tunable switch made from a small non-coding RNA molecule that could have value for medical and even biofuel production purposes.

“Living cells have multiple mechanisms to control and regulate processes—many of which involve regulating the expression of genes,” said lead project scientist Clifford Unkefer of the Laboratory’s Bioscience Div. “Scientists have investigated ways of synthetically altering gene expression for alternative purposes, such as biosynthesis of therapeutics or chemicals. Much of this work has focused on regulating translation of genes. Now we have a way to do this, using a riboregulator.”

Synthetic biology researchers have been attempting to engineer bacteria capable of carrying out a range of important medical and industrial functions, from manufacturing pharmaceuticals to detoxifying pollutants and increasing production of biofuels.

“Cells rely on a complex network of gene switches,” said coauthor Karissa Sanbonmatsu. “Early efforts in synthetic biology presumed these switches were akin to turning a light bulb on or off. We have produced switches that more closely resemble continuous dimmers, carefully regulating gene expression with exquisite control,” she said.

Noted Scott Hennelly, another research team member, “Because these riboregulators can be used to tune gene expression and can be targeted specifically to independently regulate all of the genes encoding a metabolic pathway, they give the synthetic biologist the tools necessary to optimize flux in an engineered metabolic pathway and will find wide application in synthetic biology.”

The team’s work describes riboregulators made up of a cis-repressor RNA (crRNA) and a trans-activator RNA (taRNA). The crRNA naturally folds into a structure that sequesters the ribosome-binding sequence, preventing translation of the downstream gene and thus blocking its expression.

The taRNA is transcribed independently, and the binding and subsequent structural transition between these two regulatory RNA elements dictate whether or not the transcribed mRNA is translated into the protein product. In this project, the team demonstrated a cis-repressor that completely shut off translation of antibiotic-resistance reporters and a trans-activator that restored it.

“They demonstrated that the level of translation can be tuned based on subtle changes in the primary sequence regulatory region of the taRNA; thus it is possible to achieve translational control of gene expression over a wide dynamic range,” said Hennelly.

Finally, they created a modular system that includes a targeting sequence, allowing each riboregulator to target a specific gene so that multiple genes can be regulated independently.
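
The switch logic described here can be captured in a deliberately simplified toy model. A gene carrying a cis-repressor stays off on its own. It is translated only when a trans-activator with a matching targeting sequence is present, and that activator's sequence sets how strongly translation is restored. Everything in the sketch is a hypothetical abstraction: string labels stand in for base-pairing, and a 0-to-1 `strength` stands in for sequence-tuned activation. It is not the Los Alamos team's model, and it contains no measured data.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class RepressedGene:
    name: str
    target_site: str  # hypothetical label standing in for the crRNA targeting sequence

@dataclass(frozen=True)
class TransActivator:
    target_site: str  # must match a gene's site to pair with its crRNA
    strength: float   # 0.0 (no effect) to 1.0 (fully restored translation)

    def __post_init__(self) -> None:
        if not 0.0 <= self.strength <= 1.0:
            raise ValueError("strength must lie between 0 and 1")

def translation_level(gene: RepressedGene, activators: list[TransActivator]) -> float:
    """Relative translation of `gene`: 0 unless a matching taRNA unmasks the RBS."""
    matching = [a.strength for a in activators if a.target_site == gene.target_site]
    # Assumption: with several matching activators, the strongest one dominates.
    return max(matching, default=0.0)

def pathway_levels(genes: list[RepressedGene],
                   activators: list[TransActivator]) -> dict[str, float]:
    """Independent 'dimmer' settings for every gene in an engineered pathway."""
    return {g.name: translation_level(g, activators) for g in genes}
```

Because each activator acts only on the gene whose site it matches, changing one `strength` leaves every other gene's level untouched, which is the kind of independent control the modular design is meant to provide.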

Source: Los Alamos National Laboratory

Engineers improving safety, reliability of batteries

The next big step forward in the quest for sustainable, more efficient energy is tantalizingly within reach thanks to research being led by Univ. of Tennessee (UT)’s Joshua Sangoro.

Sangoro, an assistant professor of chemical and biomolecular engineering, heads a group devoted to the study of soft materials—substances that can be manipulated while at room temperature, including liquids, polymers and foams.

While such materials have obvious uses in fields like medicine or cosmetics, the team is focused on their potential to reshape how we use energy.

“By changing the design of batteries and the substances used in them we can improve safety, performance, and reliability,” said Sangoro. “If you think about everything we use that needs power, we can affect practically everything in a big, big way.”

In the simplest terms, batteries rely on an electrolyte to shuttle ions between their electrodes, which lets current flow through the device they are powering. The most commonly used electrolytes are based on lithium salts dissolved in organic solvents.

While lithium-ion batteries are becoming more efficient, they also produce great amounts of heat, which can trigger unwanted chemical reactions within the batteries. These reactions yield toxic and highly flammable substances such as hydrofluoric acid gas.

The resulting stress from such gases has led to fires and damaged cell phones, laptops and other electrical devices, and was even thought to be the cause of some high-profile fires on airliners.

Sangoro’s team is developing a new kind of electrolyte that cuts down on many of those problems.

“We are developing ionic liquid systems to take the place of traditional electrolytes in batteries,” said Sangoro. “Not only are they nonflammable, but they are also much more stable in wide temperature ranges, and they have very low vapor pressure.

“They are more reliable and safer—without sacrificing the power requirements of the batteries.”

The other big advantage of the breakthrough is its adaptability.

By molding the substances into ultrathin films, the team has found a way to make power sources with more flexible structures.

This opens up uses not just in portable devices but also in solar cells, transistors, or anything else that needs a portable power source.

“Based on the ionic liquids we now know, we calculate that there are ten quintillion possible combinations that could be used,” said Sangoro. “We haven’t even scratched the surface yet.”

Source: The Univ. of Tennessee, Knoxville

Digital Information in DNA Strands

In the Middle Ages, a majority of Greek and Roman texts were lost. But in large monasteries, monks dutifully hand-copied texts in rooms called scriptoriums. Working in silence, they preserved works that might otherwise have been lost.

According to Robert Grass, of ETH Zürich, the monks were preserving information for future generations. In a digital age, the question facing modern society is how to preserve intangible information.

“Most of us don’t have photographs, or real photographs of the pictures we take. We don’t have paper copies of the work we perform. Everything is online and digital,” Grass said at the 250th National Meeting & Exposition of the American Chemical Society (ACS).

Grass and colleagues have addressed this problem by demonstrating that information encapsulated in the form of DNA can be preserved for at least 2,000 years.

DNA, according to Grass, is a logical storage medium because of its track record of preserving information over hundreds of thousands of years. In 2013, National Geographic reported that scientists had sequenced a genome from the leg bone of a 700,000-year-old horse.

“We take this idea of storing information in DNA,” Grass said. “We know that it is extremely stable, if it’s stored correctly, over hundreds of thousands of years. And we then have to think, ‘Well, how do we not store genomic information, but digital information?’”

“More or less, you go from translating something which is a sequence of 0s and 1s to…a sequence of A, C, T and Gs,” he added.
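Grass describes the core step as translating bits into bases. A minimal sketch of that translation, assuming the simplest possible mapping of two bits per nucleotide (00→A, 01→C, 10→G, 11→T). This mapping and the function names are hypothetical illustrations; the article does not describe the ETH Zürich team's actual encoding.

```python
# Hypothetical 2-bits-per-base mapping; NOT the scheme used by Grass's team.
BITS_TO_BASE = {"00": "A", "01": "C", "10": "G", "11": "T"}
BASE_TO_BITS = {base: bits for bits, base in BITS_TO_BASE.items()}


def bytes_to_dna(data: bytes) -> str:
    """Encode bytes as a DNA string, two bits per nucleotide."""
    bits = "".join(f"{byte:08b}" for byte in data)
    return "".join(BITS_TO_BASE[bits[i:i + 2]] for i in range(0, len(bits), 2))


def dna_to_bytes(strand: str) -> bytes:
    """Decode a DNA string produced by bytes_to_dna back into bytes."""
    bits = "".join(BASE_TO_BITS[base] for base in strand)
    return bytes(int(bits[i:i + 8], 2) for i in range(0, len(bits), 8))


strand = bytes_to_dna(b"Hi")
print(strand)
print(dna_to_bytes(strand))
```

Each byte becomes four bases. Practical schemes typically add constraints, such as avoiding long runs of the same base, which are error-prone to synthesize and sequence; this sketch ignores them.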

According to ACS, a hard drive the size of a paperback book can store five terabytes of information and last 50 years. In theory, however, a fraction of an ounce of DNA could store more than 300,000 terabytes.

The team encapsulates the DNA in silica glass. “We chemically entrap DNA molecules, and in that entrapped state they are almost as stable as they are when they are entrapped in fossil(s),” said Grass.

According to ETH Zürich, the researchers encoded DNA with text from Switzerland’s Federal Charter of 1291, and “The Methods of Mechanical Theorems” by Archimedes.

Afterwards, the silica spheres were held at 160 F for one week, a treatment meant to simulate 2,000 years of storage at 50 F. The sample was error-free when decoded, Grass said; the team built data redundancy into its storage scheme so that errors could be corrected during decoding.
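The article does not say how one week at 160 F is mapped onto 2,000 years at 50 F. Accelerated-aging tests are commonly interpreted with the Arrhenius equation, under which a decay rate scales as exp(−Ea/RT). A minimal sketch, assuming that model, that computes the acceleration factor the test implies and the activation energy Ea it would require. The activation energy is derived here from the article's figures and is not a value reported by the researchers; the function names are hypothetical.

```python
import math

R = 8.314  # molar gas constant, J/(mol*K)
WEEKS_PER_YEAR = 365.25 / 7


def fahrenheit_to_kelvin(temp_f: float) -> float:
    """Convert degrees Fahrenheit to kelvin."""
    return (temp_f - 32.0) * 5.0 / 9.0 + 273.15


def acceleration_factor(ea: float, t_store_f: float, t_test_f: float) -> float:
    """Arrhenius speed-up of decay at t_test_f relative to t_store_f (assumed model)."""
    t_store = fahrenheit_to_kelvin(t_store_f)
    t_test = fahrenheit_to_kelvin(t_test_f)
    return math.exp(ea / R * (1.0 / t_store - 1.0 / t_test))


def implied_activation_energy(accel: float, t_store_f: float, t_test_f: float) -> float:
    """Activation energy (J/mol) that makes the heated test `accel` times faster."""
    t_store = fahrenheit_to_kelvin(t_store_f)
    t_test = fahrenheit_to_kelvin(t_test_f)
    return R * math.log(accel) / (1.0 / t_store - 1.0 / t_test)


# One week at 160 F standing in for 2,000 years at 50 F (figures from the article).
accel = 2000 * WEEKS_PER_YEAR
ea = implied_activation_energy(accel, 50.0, 160.0)
print(f"acceleration: {accel:,.0f}x, implied Ea: {ea / 1000:.0f} kJ/mol")
```

Under this assumption, the equivalence holds only if DNA decay in silica follows a single Arrhenius process with an activation energy near the computed value.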

“Right now, we can read everything that’s in that (DNA) drop,” said Grass. “But I can’t point to a specific place within the drop and read only one file.” Making the DNA storage searchable is the next task for the team.

But don't expect to see this technology anytime soon. According to Grass, storing a few megabytes costs thousands of dollars. Cost is certainly one of the biggest constraints in this field, as in any new academic or technology field, he said.

Venus flytrap inspires development of folding “snap” geometry

Inspired by natural "snapping" systems like Venus flytrap leaves and hummingbird beaks, a team led by physicist Christian Santangelo at the Univ. of Massachusetts Amherst has developed a way to use curved creases to give thin curved shells a fast, programmable snapping motion. The new technique avoids the need for complicated materials and fabrication methods when creating structures with fast dynamics.

The advance should help materials scientists and engineers who wish to design structures that can rapidly switch shape and properties, says Santangelo. He and colleagues, including polymer scientist Ryan Hayward, point out that until now, there has not been a general geometric design rule for creating a snap between stable states of arbitrarily curved surfaces.

"A lot of plants and animals take advantage of elasticity to move rapidly, yet we haven't really known how to use this in artificial devices," says Santangelo. "This gives us a way of using geometry to design ultrafast, mechanical switches that can be used, for example, in robots." Details of the new geometry appear in an early online issue of Proceedings of the National Academy of Sciences.

The authors point out, "While the well-known rules and mechanisms behind folding a flat surface have been used to create deployable structures and shape transformable materials, folding of curved shells is still not fundamentally understood." The coupling of bending and stretching that occurs when a shell deforms naturally gives such items "great stability for engineering applications," they add, but it also makes folding a curved surface a nontrivial task.

Santangelo and colleagues' paper outlines the geometry of folding a creased shell and demonstrates the conditions under which it may fold smoothly. They say the new technique "will find application in designing structures over a wide range of length scales, including self-folding materials, tunable optics and switchable frictional surfaces for microfluidics," such as those used in inkjet printer heads and lab-on-a-chip technology.

The authors explain, "Shape programmable structures have recently used origami to reconfigure using a smooth folding motion, but are hampered by slow speeds and complicated material assembly." They say the fast snapping motion they developed "represents a major step in generating programmable materials with rapid actuation capabilities."

Their geometric design work "lays the foundation for developing non-Euclidean origami, in which multiple folds and vertices combine to create new structures," write Santangelo and colleagues, and the principles and methods "open the door for developing design paradigms independent of length-scale and material system."

Source: Univ. of Massachusetts Amherst

Researchers developing next generation of high-power lasers

Researchers at the Univ. of Strathclyde are developing groundbreaking plasma-based light amplifiers that could replace traditional high-power laser amplifiers.

The research group at the Glasgow-based university is leading efforts to exploit plasma, the ubiquitous state of matter that makes up most of the visible universe, to achieve this breakthrough.

The next generation of high-power lasers should be able to crack the vacuum to produce real particles from the sea of virtual particles. Examples of these types of lasers can be found at the Extreme Light Infrastructure facilities in Bucharest, Prague and Szeged, which are pushing the boundaries of what can be done with high-intensity light.

Prof. Dino Jaroszynski and Dr. Gregory Vieux from Strathclyde's Faculty of Science hope that the developments can produce a very compact and robust light amplifier.

Prof. Jaroszynski said: "The lasers currently being used are huge and expensive devices, requiring optical elements that can be more than a meter in diameter. Large laser beams are required because traditional optical materials are easily damaged by high intensity laser beams.

"Plasma is completely broken down atoms, which are separated into their constituent parts of positively charged ions and very light and mobile electrons, which have unique properties in that they respond easily to laser fields.

"We are investigating the limitations of this method of amplifying short laser pulses in plasma and hope this will lead to a more compact and cost effective solution."

The research was published in Scientific Reports. It suggests that electron trapping and wavebreaking are the main physical processes limiting energy transfer efficiency in plasma-based amplifiers.

The authors have demonstrated that pump chirp (a frequency sweep similar to the gliding pitch of a swanee, or slide, whistle) and finite plasma temperature reduce the amplification factor. Moreover, the thermal distribution of the electrons (the way their velocities are distributed) leads to particle trapping (particles get stuck in the troughs of the waves) and a nonlinear frequency shift (the color of the amplified light changes), both of which further reduce amplification. The team also suggests methods for achieving higher efficiencies.
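The article does not name the amplification mechanism. Plasma amplifiers of this kind are often based on stimulated Raman backscattering, in which a long pump pulse transfers energy to a short, counter-propagating seed pulse through a plasma wave. A minimal sketch, assuming that mechanism and a cold plasma, of the resonance condition ω_pump = ω_seed + ω_p, where ω_p = sqrt(n_e·e²/(ε0·m_e)) is the electron plasma frequency. The 800 nm pump and 10^19 cm^-3 density are illustrative values, not parameters from the Strathclyde study, and the function names are hypothetical.

```python
import math

# Physical constants (SI units)
E_CHARGE = 1.602176634e-19   # elementary charge, C
E_MASS = 9.1093837015e-31    # electron mass, kg
EPS0 = 8.8541878128e-12      # vacuum permittivity, F/m
C_LIGHT = 2.99792458e8       # speed of light, m/s


def plasma_frequency(n_e_per_cm3: float) -> float:
    """Cold-plasma electron frequency omega_p in rad/s."""
    n_e = n_e_per_cm3 * 1e6  # convert cm^-3 to m^-3
    return math.sqrt(n_e * E_CHARGE**2 / (EPS0 * E_MASS))


def raman_seed_wavelength(pump_wavelength_m: float, n_e_per_cm3: float) -> float:
    """Seed wavelength (m) satisfying omega_pump = omega_seed + omega_p."""
    omega_pump = 2 * math.pi * C_LIGHT / pump_wavelength_m
    omega_p = plasma_frequency(n_e_per_cm3)
    if omega_p >= omega_pump:
        # Overdense plasma: the pump cannot propagate at all.
        raise ValueError("plasma is overdense for this pump wavelength")
    omega_seed = omega_pump - omega_p
    return 2 * math.pi * C_LIGHT / omega_seed


seed = raman_seed_wavelength(800e-9, 1e19)
print(f"seed wavelength: {seed * 1e9:.0f} nm")
```

This cold-plasma estimate deliberately ignores the finite temperature and nonlinear frequency shift that the authors identify as limits on amplification.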

Source: Univ. of Strathclyde

Chemical Compound Reduces Alcohol Cravings

Nearly 88,000 people in the U.S. die annually from alcohol-related causes, according to the National Institute on Alcohol Abuse and Alcoholism. Nationally, alcohol is the third leading preventable cause of death. The National Council on Alcoholism and Drug Dependence reports that 17.6 million people in the country suffer from alcohol abuse or dependence.

“Alcoholism is a major problem in the U.S.,” said V. V. N. Phani Babu Tiruveedhula, of the Univ. of Wisconsin, Milwaukee. “Alcohol abuse costs almost $220 billion to the U.S. economy every year. That’s a shocking number. We need…better treatment right now.”

Tiruveedhula is in the midst of developing a new compound to treat alcoholism, and a drug could be on the market within five to six years. He presented his research at the 250th National Meeting & Exposition of the American Chemical Society (ACS).

“It is very exciting. We found a new way to treat alcoholism, rather than the traditional ways,” he said.

While the underlying causes of alcoholism vary and can be quite specific to an individual, researchers know that alcohol acts on the brain's reward center by triggering the release of dopamine, a neurotransmitter whose levels rise in response to pleasurable behavior.

According to Tiruveedhula, current drugs meant to combat alcoholism target the dopamine reward pathway and attempt to dampen the rewards from alcohol-related stimuli. But these opioid antagonists can have adverse side effects. One unintended result is anhedonia, or the inability to feel pleasure, said Tiruveedhula.

Tiruveedhula synthesized a beta-carboline compound, 3-PBC·HCl, that diminished drinking in rats bred to crave alcohol. Additionally, he simplified the production process from eight to two steps, while increasing production yield tenfold.

He worked with James Cook, a chemist at the Univ. of Wisconsin, Milwaukee, and Harry June, a psychopharmacologist at Howard Univ., to test the compound in a laboratory setting.

The researchers observed that the compound didn't induce side effects such as depression or the inability to experience pleasure, according to ACS.

“What excites me is the compounds are orally active, and they don’t cause depression like some drugs do,” Cook said.

These promising results have prompted the researchers to test the compound in additional animal studies. The team is also working with several other beta-carboline compounds.

Superlattice design realizes elusive multiferroic properties

From the spinning disc of a computer’s hard drive to the varying current in a transformer, many technological devices work by merging electricity and magnetism. But the search to find a single material that combines both electric polarizations and magnetizations remains challenging.

Materials in this elusive class, called multiferroics, combine two or more primary ferroic properties. Northwestern Engineering's James Rondinelli and his research team are interested in combining ferromagnetism and ferroelectricity, which rarely coexist in one material at room temperature.

“Researchers have spent the past decade or more trying to find materials that exhibit these properties,” said Rondinelli, assistant professor of materials science and engineering at the McCormick School of Engineering. “If such materials can be found, they are both interesting from a fundamental perspective and yet even more attractive for technological applications.”

For ferroelectricity to exist, a material must be insulating. For this reason, nearly every approach to date has focused on searching for multiferroics among insulating magnetic oxides. Rondinelli's team took a different approach. Using quantum mechanical calculations, they studied a metallic oxide, lithium osmate, that is structurally predisposed to ferroelectricity, and sandwiched it between layers of an insulating material, lithium niobate.

While lithium osmate is a non-magnetic metal, lithium niobate is insulating and ferroelectric but also non-magnetic. By alternating layers of the two materials, Rondinelli's team designed a superlattice that, at the quantum scale, became insulating, ferromagnetic and ferroelectric at room temperature.

“The polar metal became insulating through an electronic phase transition,” Rondinelli explained. “Owing to the physics of the enhanced electron-electron interactions in the superlattice, the electronic transition induces an ordered magnetic state.”

Supported by the Army Research Office and the US Department of Defense, the research appears in Physical Review Letters. Danilo Puggioni, a postdoctoral fellow in Rondinelli's lab, is the paper's first author; he is joined by collaborators at the International School for Advanced Studies in Trieste, Italy.

This new design strategy for realizing multiferroics could open up new possibilities for electronics, including logic processing and new types of memory storage. Multiferroic materials also hold potential for low-power electronics as they offer the possibility to control magnetic polarizations with an electric field, which consumes much less energy.

“Our work has turned the paradigm upside down,” Rondinelli said. “We show that you can start with metallic oxides to make multiferroics.”

Source: Northwestern Univ.

Saturday, August 15, 2015

Real-Time Process Measurement: A Sea Change in Manufacturing

In a world where most information is available in an instant, plant managers and engineers are continuously trying to find ways to improve the efficiency of processes along the manufacturing line. Analyzing these processes can be a difficult task. Until recently, days of laboratory work were often required to analyze any given sample segment or process in a manufacturing line. If there was a problem that needed correction, it could sometimes take days to discover. Meanwhile, those days spent analyzing became lost production time.

There is a virtually unlimited number of attributes that manufacturers want to measure on the plant floor; everything from color mixtures to chemical baths needs to be constantly tested, analyzed and corrected. Without a process measurement system that gives real-time feedback, this can be an impossible task. However, recent implementations based on the science of spectroscopy are proving ideal for manufacturers looking to benefit from real-time process measurement.

How does it work?

Spectroscopy is the study of the interaction between matter and radiated energy. While spectroscopy can be performed using any portion of the electromagnetic spectrum, from x-rays to radio waves, its use in process measurement typically involves the portion from the ultraviolet through the infrared that's generically termed “light.” As applied to process measurement, spectroscopy typically means illuminating the sample stream with a known range of wavelengths and carefully analyzing the reflected or absorbed light to extract chemical information about the sample. Because different samples and molecules interact with light in unique ways, this approach makes it possible not only to detect the proverbial needle in a haystack, but also to quantify the amount of the needle, or even of multiple needles, with high accuracy. Perhaps more importantly, all of this analysis can be performed in situ, within the sample stream, in seconds or less.
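For absorption measurements, the step from analyzing the absorbed light to quantifying the needle is often made with the Beer–Lambert law, A = −log10(I/I0) = ε·l·c, where A is absorbance, ε the molar absorptivity, l the path length and c the concentration. A minimal sketch, assuming a single absorbing species in a dilute, non-scattering stream with known ε and l; the numbers and function names are illustrative, not taken from any particular analyzer.

```python
import math


def absorbance(transmitted: float, incident: float) -> float:
    """Absorbance A = -log10(I / I0)."""
    if not 0 < transmitted <= incident:
        raise ValueError("expected 0 < I <= I0")
    return -math.log10(transmitted / incident)


def concentration(transmitted: float, incident: float,
                  molar_absorptivity: float, path_length_cm: float) -> float:
    """Beer-Lambert: c = A / (epsilon * l), in mol/L when epsilon is in L/(mol*cm)."""
    return absorbance(transmitted, incident) / (molar_absorptivity * path_length_cm)


# Illustrative reading: 10% of the light passes through a 1 cm flow cell.
c = concentration(transmitted=10.0, incident=100.0,
                  molar_absorptivity=5000.0, path_length_cm=1.0)
print(f"{c:.1e} mol/L")
```

Real process streams scatter light and contain overlapping absorbers, which is where the chemometric methods described below come in.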

There are four main wavelength regions of light typically used in spectroscopy-based process measurement: ultraviolet (UV), visible (VIS), near-infrared (NIR) and infrared (IR). UV spectroscopy measures very short wavelengths, beyond the violet end of what human eyes can perceive. This type of spectroscopy is best suited for measuring very low concentrations of a target molecule in a liquid stream. VIS spectroscopy measures the light that human eyes perceive as color, but with the sensitivity needed to accurately determine a wide range of product color attributes, brightness and clarity. NIR spectroscopy measures light just beyond the red end of the visible range, where it interacts with molecules to provide chemical detection and quantification at high speed. The molecular interactions that occur with NIR light are overtones of their fundamental interactions in the IR, so the fast measurement speed in the NIR comes at the expense of sensitivity, and NIR is typically used to quantify target species at percent-level concentrations. IR spectroscopy measures light at even longer wavelengths than the NIR, well beyond what can be seen and into the range we feel as heat. This light directly probes molecular bonds and can be used to detect and quantify a wide range of target molecules down to very low concentrations, even in the gas phase.
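The paragraph above names the four regions but not their boundaries, which are conventions rather than sharp physical limits. A minimal sketch, assuming commonly quoted approximate cut-offs (UV below 400 nm, VIS 400 to 780 nm, NIR 780 to 2,500 nm, IR beyond), that classifies a wavelength and shows why NIR bands are overtones of IR ones: in the harmonic approximation, an overtone sits at an integer multiple of the fundamental wavenumber. Real overtones fall slightly lower because molecular vibrations are anharmonic, and the C–H stretch near 2,950 cm⁻¹ is used only as a familiar example.

```python
def region(wavelength_nm: float) -> str:
    """Classify a wavelength using approximate, conventional boundaries."""
    if wavelength_nm < 400:
        return "UV"
    if wavelength_nm < 780:
        return "VIS"
    if wavelength_nm < 2500:
        return "NIR"
    return "IR"


def wavenumber_to_nm(wavenumber_cm: float) -> float:
    """Convert a wavenumber in cm^-1 to a wavelength in nm."""
    return 1e7 / wavenumber_cm


FUNDAMENTAL_CH = 2950.0  # approximate C-H stretching fundamental, cm^-1

for n in (1, 2, 3):
    wl = wavenumber_to_nm(n * FUNDAMENTAL_CH)  # harmonic approximation
    label = "fundamental" if n == 1 else f"overtone {n - 1}"
    print(f"{label}: {wl:.0f} nm ({region(wl)})")
```

Overtone transitions are much weaker than fundamentals, which is consistent with the trade of sensitivity for speed described above.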

While the target molecule and its environment define which spectroscopy best serves the application, obtaining the optical signal from the sample is only half of the solution. Converting that optical information into something useful, such as the concentration of a target compound in a process stream, entails a combination of signal processing and statistics that has become a science of its own, called chemometrics. Improvements in these chemometric methods, along with advances in the optical components comprising the process analyzers, can now deliver information about processes that was unavailable in the past, and certainly wasn’t available in such convenient forms. The technology provides chemical and physical information in-line that’s non-destructive, instant and requires no sample preparation. It also requires no additional chemicals, no hazardous waste products and no trained technicians to operate.

A Reveal E-Series used in a blend uniformity application measuring active pharmaceutical ingredient (APIs). Image: Prozess Technologie

A Reveal E-Series used in a blend uniformity application measuring active pharmaceutical ingredient (APIs). Image: Prozess Technologie

So why is this different from spectroscopic solutions available in the past?

The biggest reason there’s more attention paid to spectroscopy lately is the revolutionary new way these process measurement devices are packaged. What once took a symphony of engineers and scientists to analyze the process and deduce results can now be done by nearly anyone working on the plant floor. Process measurement devices using spectroscopy can now be controlled via any Web-enabled device or, conventionally, within the automation control systems in the factory. Users are able to control the analyzer, stream real-time data, log operator activity and monitor output from anywhere they desire, whether inside the plant or across the globe.

Spectroscopic process measurement solutions are also gaining popularity because of their versatility. The applications are nearly limitless, and include such varied purposes as chemical quantification, cleaning validation, color measurement, reaction monitoring, ensuring blending uniformity and moisture determination. A number of interfaces are available to connect the analyzer to the process stream, including reflectance probes, dip probes and flow-through cells, that can either be directly connected to the process analyzer or connected remotely via fiber optics. Furthermore, liquids, solids, powders and gases all can be analyzed using spectroscopy. And, as opposed to chemical sensors and other analytical methods employed for in-line process measurement, optical spectroscopy allows the same system to measure a number of parameters simultaneously and be reprogrammed should there be changes to the process stream composition.

It’s easy to see why those in manufacturing environments are beginning to see these solutions as not only viable, but critical. User-ready spectroscopic process analyzers are no longer cost prohibitive nor labor intensive. Improved manufacturing efficiency and product quality afforded by real-time, in-line process analyzers are invaluable. As companies continue to explore ways to differentiate their operations from that of the competition, it’s more evident that implementing an efficient process measurement system is a critical piece of the puzzle.

Manufacturers want to measure a nearly unlimited number of attributes on the plant floor: everything from color mixtures to chemical baths must be constantly tested, analyzed and corrected. Without a process measurement system that provides real-time feedback, this can be nearly impossible. However, recent implementations based on spectroscopy are proving ideal for manufacturers looking to benefit from real-time process measurement.

How does it work?

Spectroscopy is the study of the interaction between matter and radiated energy. While spectroscopy can be performed using any portion of the electromagnetic spectrum, from x-rays to radio waves, its use in process measurement typically involves the portion from the ultraviolet through the infrared that’s generically termed “light.” As applied to process measurement, spectroscopy typically means illuminating the sample stream with a known range of wavelengths and carefully analyzing the reflected or absorbed light to extract chemical information about the sample. Because different samples and molecules interact with light in unique ways, this approach makes it possible not only to detect the proverbial needle in a haystack, but also to quantify the amount of that needle, or even of multiple needles, with high accuracy. Perhaps more importantly, all of this analysis can be performed in situ, within the sample stream, in seconds or less.
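For absorption measurements on clear liquids, the link between absorbed light and concentration is usually described by the Beer–Lambert law, A = log10(I0/I) = εlc. Here ε is the molar absorptivity, l is the optical path length and c is the concentration. The article does not name this law, and reflectance measurements on solids and powders follow different relations. Treat the following as a minimal sketch for a dilute, non-scattering liquid only. The function names, the 1 cm path length and the absorptivity value are hypothetical.

```python
import math


def absorbance(incident: float, transmitted: float) -> float:
    """Absorbance A = log10(I0 / I) from incident and transmitted intensities."""
    if incident <= 0 or transmitted <= 0:
        raise ValueError("intensities must be positive")
    return math.log10(incident / transmitted)


def concentration(a: float, molar_absorptivity: float, path_length_cm: float) -> float:
    """Invert the Beer-Lambert law A = epsilon * l * c to obtain c in mol/L."""
    return a / (molar_absorptivity * path_length_cm)


# Hypothetical reading: 10% of the light passes through a 1 cm flow cell.
A = absorbance(100.0, 10.0)
# Assumed molar absorptivity of 5000 L/(mol*cm) for the target species.
c = concentration(A, 5000.0, 1.0)
print(f"A = {A:.3f}, c = {c:.2e} mol/L")  # prints "A = 1.000, c = 2.00e-04 mol/L"
```

A real analyzer would also correct for a reference (blank) spectrum and work across many wavelengths rather than a single reading.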

There are four main wavelength regions of light typically used in spectroscopy-based process measurement: ultraviolet (UV), visible (VIS), near-infrared (NIR) and infrared (IR). UV spectroscopy measures very short wavelengths, just beyond the blue-violet end of what human eyes can perceive. It is best suited for measuring very low concentrations of a target molecule in a liquid stream. VIS spectroscopy measures the light that human eyes perceive as color, but with enough sensitivity to accurately determine a wide range of product color attributes, brightness and clarity. NIR spectroscopy measures light just beyond the red end of human vision, where it interacts with molecules to provide fast chemical detection and quantification. NIR absorptions are overtones and combinations of the fundamental molecular vibrations probed in the IR, and they are much weaker. As a result, the NIR’s measurement speed comes at the expense of sensitivity, and NIR is typically used to quantify target species at percent-level concentrations. IR spectroscopy measures light of even longer wavelengths than the NIR, well beyond what can be seen and into the range we feel as heat. This light directly probes molecular bonds and can detect and quantify a wide range of target molecules at very low concentrations, even in the gas phase.
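The article gives no numeric limits for these four regions. The sketch below uses commonly quoted approximate boundaries, which are assumptions that vary between sources and instrument vendors:

- about 190–400 nm for process UV
- 400–780 nm for VIS
- 780–2500 nm for NIR
- 2500–25,000 nm for mid-IR

The sketch also converts wavelength to wavenumber (cm⁻¹), the unit in which IR spectra are conventionally reported. The `REGIONS` table and both function names are hypothetical.

```python
# Approximate region boundaries in nanometres (assumed conventions; sources differ).
REGIONS = [
    ("UV", 190.0, 400.0),
    ("VIS", 400.0, 780.0),
    ("NIR", 780.0, 2500.0),
    ("IR", 2500.0, 25000.0),
]


def region(wavelength_nm: float) -> str:
    """Return the name of the region containing the wavelength, or 'outside'."""
    for name, lo, hi in REGIONS:
        if lo <= wavelength_nm < hi:
            return name
    return "outside"


def wavenumber_cm1(wavelength_nm: float) -> float:
    """Convert a wavelength in nm to a wavenumber in cm^-1 (1 cm = 1e7 nm)."""
    return 1e7 / wavelength_nm


for wl in (254.0, 550.0, 1450.0, 3400.0):
    print(f"{wl:7.1f} nm  {region(wl):>3}  {wavenumber_cm1(wl):8.1f} cm^-1")
```

Under these assumptions, the NIR/IR boundary at 2500 nm corresponds to 4000 cm⁻¹.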

While the target molecule and its environment determine which type of spectroscopy best serves the application, obtaining the optical signal from the sample is only half of the solution. Converting that optical information into something useful, such as the concentration of a target compound in a process stream, requires a combination of signal processing and statistics that has become a science of its own, called chemometrics. Improvements in chemometric methods, along with advances in the optical components of process analyzers, can now deliver process information that was unavailable in the past, and certainly wasn’t available in such convenient forms. The technology provides chemical and physical information in-line, non-destructively and almost instantly, with no sample preparation. It also requires no additional chemicals, generates no hazardous waste and needs no specially trained technicians to operate.
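To make the chemometrics step concrete, here is a minimal sketch of classical least squares (CLS), one of the simplest multivariate calibration methods. It is not necessarily what any particular analyzer uses; commercial systems often rely on partial least squares (PLS) with spectral preprocessing.

The sketch makes several assumptions:

- Absorbances from different species add linearly.
- The spectra are synthetic Gaussian bands on a hypothetical NIR grid.
- Noise levels and concentrations are invented for illustration.

Calibration spectra with known concentrations are used to estimate each species’ pure-component spectrum. A new spectrum is then decomposed to estimate all concentrations at once.

```python
import numpy as np

rng = np.random.default_rng(0)
wavelengths = np.linspace(1100.0, 2300.0, 300)  # hypothetical NIR grid, nm


def band(center: float, width: float) -> np.ndarray:
    """Gaussian absorption band used to fake a pure-component spectrum."""
    return np.exp(-0.5 * ((wavelengths - center) / width) ** 2)


# Hypothetical pure-component spectra for two species (rows = species).
K_true = np.vstack([
    band(1450.0, 40.0) + 0.3 * band(1940.0, 50.0),
    band(1720.0, 30.0) + 0.5 * band(2250.0, 60.0),
])

# Calibration standards: known concentrations (rows = samples, cols = species)
# and their measured spectra, with a little simulated detector noise.
C_cal = rng.uniform(0.0, 1.0, size=(12, 2))
A_cal = C_cal @ K_true + rng.normal(0.0, 0.002, size=(12, wavelengths.size))

# CLS calibration: solve C_cal @ K ~= A_cal for K in the least-squares sense.
K_est, *_ = np.linalg.lstsq(C_cal, A_cal, rcond=None)


def predict(spectrum: np.ndarray) -> np.ndarray:
    """Estimate every component concentration from a single spectrum."""
    c, *_ = np.linalg.lstsq(K_est.T, spectrum, rcond=None)
    return c


# An "unknown" process sample with hypothetical true concentrations.
c_unknown = np.array([0.25, 0.60])
spectrum = c_unknown @ K_true + rng.normal(0.0, 0.002, size=wavelengths.size)
print(np.round(predict(spectrum), 3))  # should be close to [0.25, 0.60]
```

CLS needs every absorbing species in the calibration set. PLS is often preferred in practice because it tolerates unmodeled interferents and baseline effects better.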

A Reveal E-Series used in a blend uniformity application measuring active pharmaceutical ingredients (APIs). Image: Prozess Technologie

So how is this different from the spectroscopic solutions of the past?

The biggest reason spectroscopy is attracting more attention lately is the revolutionary new way these process measurement devices are packaged. What once took a symphony of engineers and scientists to analyze the process and deduce results can now be done by nearly anyone working on the plant floor. Spectroscopic process measurement devices can now be controlled from any Web-enabled device or, more conventionally, through the factory’s automation control system. Users can control the analyzer, stream real-time data, log operator activity and monitor output from anywhere, whether inside the plant or across the globe.

Spectroscopic process measurement solutions are also gaining popularity because of their versatility. The applications are nearly limitless and include such varied purposes as chemical quantification, cleaning validation, color measurement, reaction monitoring, blend uniformity and moisture determination. A number of interfaces are available to connect the analyzer to the process stream, including reflectance probes, dip probes and flow-through cells. These can be connected either directly to the analyzer or remotely via fiber optics. Furthermore, liquids, solids, powders and gases can all be analyzed using spectroscopy. And unlike chemical sensors and other analytical methods used for in-line process measurement, optical spectroscopy allows a single system to measure several parameters simultaneously and to be reprogrammed if the composition of the process stream changes.

It’s easy to understand why manufacturers are beginning to regard these solutions as not only viable, but critical. User-ready spectroscopic process analyzers are no longer cost-prohibitive or labor-intensive, and the gains in manufacturing efficiency and product quality that real-time, in-line analyzers afford are invaluable. As companies continue to explore ways to differentiate their operations from those of their competitors, it’s increasingly evident that an efficient process measurement system is a critical piece of the puzzle.

Next-gen illumination using silicon quantum dot-based white-blue LED

A Si quantum dot (QD)-based hybrid inorganic/organic light-emitting diode (LED) that exhibits white-blue electroluminescence has been fabricated by Prof. Ken-ichi Saitow (Natural Science Center for Basic Research and Development, Hiroshima Univ.), graduate student Yunzi Xin and their collaborators. Hybrid LEDs are expected to become next-generation illumination devices for flexible lighting and displays, and the team has now achieved this with a Si QD-based white-blue LED. A description appears in Applied Physics Letters.

The Si QD hybrid LED was made using a simple method: almost all processes were solution-based and conducted at ambient temperature and pressure. Conductive polymer solutions and a colloidal Si QD solution were deposited on a glass substrate. At the same voltage (6 V), the LED’s current density and optical power density are, respectively, 280 and 350 times greater than those previously reported for such a device. In addition, the LED’s active area is 4 mm², 40 times larger than that of a typical commercial LED, and the device is 0.5 mm thick.
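The 280- and 350-fold figures are densities, meaning they are normalized by active area, which is what lets devices of different sizes be compared. The symbols here are introduced for illustration only. Using just the numbers quoted, a quick check gives the implied active area of a typical commercial LED and the definition of current density J in terms of total current I:

$$
A_{\text{commercial}} \approx \frac{A_{\text{Si QD}}}{40} = \frac{4\ \text{mm}^2}{40} = 0.1\ \text{mm}^2,
\qquad
J = \frac{I}{A_{\text{active}}}
$$

At equal current density, the 4 mm² device would therefore carry about 40 times the total current of a 0.1 mm² commercial chip.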

Prof. Saitow stated, "QD LED has attracted significant attention as a next-generation LED. Although several breakthroughs will be required for achieving implementation, a QD-based hybrid LED allows us to give so fruitful feature that we cannot imagine."

Source: Hiroshima Univ.

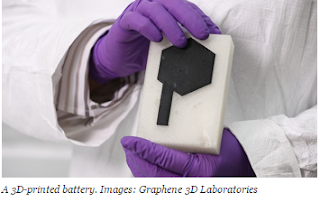

The Possibilities of 3D Printing: It’s Only the Beginning

The future of 3D printing is bright and full of exciting promise. But the most intriguing scenario for this technology isn’t the manufacture of objects we see every day; that will be only a small niche in the 3D-printing industry. Instead, 3D printing will realize its full potential when it enables people to innovate and create entirely new objects and devices in a one-touch process.

3D printing allows for distributed manufacturing, meaning products can be created on demand at a nearby facility. In the near future, this will allow consumers to purchase goods that fit their very specific needs. They will also be able to have those goods printed and shipped within hours, as opposed to the weeks it can take to receive a custom item.

Furthermore, 3D printing will allow people to exchange their creative designs quickly and easily, from a new take on an everyday object to an entirely new electronic device. The ability to create and share like never before is what really makes 3D printing the process of the future. While it has endless possibilities to improve the world around us, it’s still in the early stages of commercial development. Currently, 3D-printing technology allows people to print parts for a broken washing machine, provided the broken part doesn’t need to withstand high forces and can be made of plastic. But within just five years, I foresee 3D printers capable of printing high-quality parts on demand, and within 10 to 15 years, we will see at-home 3D printing for the majority of needs.

So what hurdles need to be overcome to achieve these big feats? The two biggest problems are limited production capabilities and the fact that 3D printers aren’t easy to use. But when 3D printing evolves into a simple push-button process, people will be able to go to a local store and use a 3D printer, or use one at home, to print useful items rather than just models.